Recent advances in auditory reverse correlation

On the occasion of the PhD defense of Aynaz Adl Zarrabi, the FEMTO Neuro group is hosting a mini-workshop on recent advances in auditory reverse correlation on Wednesday, July 9th, 2025, in Besançon, France.

Reverse correlation is a family of experimental and analytical methods in human psychophysics that takes inspiration from Wiener/Volterra theories of system identification. While reverse correlation has a long and prestigious history in neurophysiology and psychophysics, its relatively recent application to high-level auditory tasks such as music or language perception has led to a burgeoning research community, with France as one of its local epicenters.

Following similar events organized in Paris (2018) and Ghent (2024), this mini-workshop brings together four early-career CNRS PIs from Paris, Aix-en-Provence and Marseille for a morning of recent advances in auditory reverse correlation.

The workshop is open to both experts and newcomers to the field. It will be held at the FEMTO-ST Institute in Besançon, in the Jean-Jacques Gagnepain amphitheater, FEMTO TEMIS Building, 15B avenue des Montboucons, with remote attendance possible via Zoom. Attendance is free, subject to seat availability (no reservations are taken). Non-FEMTO personnel should be prepared to show an ID document at the entrance counter (mandatory). The workshop is organized thanks to the continued support of Fondation pour l’Audition.

Information, contact: JJ Aucouturier (FEMTO-ST Institute, Besançon) & Marie Villain (Institut du Cerveau, Paris)

Program

| July 9th morning | Mini workshop | |
|---|---|---|
| 9:45am | JJ Aucouturier (FEMTO-ST Institute, Besançon) & Marie Villain (Institut du Cerveau, Paris) | Introduction |
| 10:00am | Dr Léo Varnet (Laboratoire des Systèmes Perceptifs, Paris) | The microscopic impact of noise on phoneme perception and some implications for the nature of phonetic cues |
| 10:30am | Dr Etienne Thoret (Institut de Neurosciences de la Timone, Marseille) | Advancing the impact of machine learning in natural sciences by explaining black-box systems with reverse correlation |
| 11:00am | Dr Emmanuel Ponsot (Sciences et Technologies de la Musique et du Son, Paris) | Is Reverse-Correlation Appropriate for Uncovering (Para)linguistic Inferences from Speech Prosody? |
| 11:30am | Dr Ladislas Nalborczyk (Laboratoire Parole et Langage, Aix-en-Provence) | Using reverse correlation to uncover the mental representation of one’s own voice |

| July 9th afternoon | PhD Defense | |
|---|---|---|
| 2:00pm | Aynaz Adl Zarrabi (FEMTO-ST Institute, Besançon) | PhD Defense (Reverse-correlation modeling of deficits of prosody perception in right-hemisphere stroke) |

9th July morning - Mini workshop

10:00am - Léo Varnet

Speaker: Dr Léo Varnet, Laboratoire des Systèmes Perceptifs, Paris

Title: The microscopic impact of noise on phoneme perception and some implications for the nature of phonetic cues

Abstract: The effect of background noise on speech perception is a multifaceted phenomenon. Psycholinguists often attribute the reduced intelligibility in noise to energetic masking: weak elements of the speech signal are no longer audible and cannot contribute to recognition. However, the addition of randomness in the signal also contributes to the deleterious effect of noise. Adopting a reverse correlation approach, we explored how trial-by-trial variations in the noise envelope influence phoneme categorization. This method revealed that noise not only masks but also interacts with phonetic cues in systematic ways, a phenomenon that we termed the "microscopic effect of noise". By focusing on this phenomenon, we obtained new insights into the hierarchical structure of mental representations of phonemes.

Bio: Léo Varnet is a CNRS researcher affiliated with École Normale Supérieure de Paris (Laboratoire des Systèmes Perceptifs). Originally trained as an engineer, he completed his PhD in neuroscience at Université Lyon 1 in 2016, followed by postdoctoral positions at ENS Paris and at University College London (Speech Hearing and Phonetic Sciences department). His research focuses on hearing and speech, with an emphasis on the interface between the two domains: how does the brain decode sounds into phonemes and break the continuous signal into words? What happens to these processes in cases of sensorineural hearing loss? His work combines computational auditory modeling and behavioral reverse correlation, aiming to develop a coherent framework for understanding speech perception.

Zoom link: https://cnrs.zoom.us/j/98877981395?pwd=QsQhIuMPBgUybaP2m51reocDkTFUxf.1

10:30am - Etienne Thoret

Speaker: Dr Etienne Thoret, Institut de Neurosciences de la Timone, Marseille

Title: Advancing the impact of machine learning in natural sciences by explaining black-box systems with reverse correlation

Abstract: Machine learning (ML) systems are widely used in natural sciences to make complex predictions but often work as black boxes for their users, offering little insight into the underlying representations driving their predictions. While these systems typically indicate the presence of relevant information in the data, they rarely reveal what this information is, concealing the biological mechanisms being studied. This limitation hampers interpretability and reduces the potential for even more meaningful scientific insights. Reverse correlation, originally developed in neuroscience to decode perceptual and cognitive processes, offers a solution to this challenge. By randomly perturbing system inputs and analyzing the resulting responses, this method uncovers the behaviors of ML systems, identifying the features most critical to their functioning and shedding light on their hidden representations. I will first introduce the method, explaining how input perturbations and output analyses can help uncover system behavior. Next, I will present a proof of concept demonstrating its use in developing a voice biomarker for characterizing sleep deprivation. Finally, I will explore broader applications spanning musical timbre characterization, ecoacoustic marker development, and unveiling cerebral representations in auditory neuroscience, highlighting the potential of reverse correlation to advance the impact of machine learning across diverse fields of natural sciences.
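For newcomers, the idea of randomly perturbing inputs and analyzing responses can be illustrated with a minimal sketch. Everything below is an assumption made for illustration and not the speaker's implementation: `black_box` is a hypothetical toy classifier with hidden weights, the perturbations are Gaussian, and the kernel is estimated as the mean input for response 1 minus the mean input for response 0.

```python
import random

def black_box(x):
    # Hypothetical opaque system (illustrative assumption): it answers 1
    # when a hidden weighted sum of its inputs is positive, else 0.
    hidden_weights = [0.0, 1.0, 2.0, 1.0, 0.0, -1.0]
    return 1 if sum(w * v for w, v in zip(hidden_weights, x)) > 0 else 0

def reverse_correlate(system, n_features, n_trials, seed=0):
    """Estimate which input features drive a binary system's responses."""
    rng = random.Random(seed)
    sums = {0: [0.0] * n_features, 1: [0.0] * n_features}
    counts = {0: 0, 1: 0}
    for _ in range(n_trials):
        # Random Gaussian perturbation presented to the system.
        x = [rng.gauss(0.0, 1.0) for _ in range(n_features)]
        response = system(x)
        counts[response] += 1
        for i, value in enumerate(x):
            sums[response][i] += value
    # Kernel: mean input when the system answered 1 minus mean when it answered 0.
    mean_1 = [s / max(counts[1], 1) for s in sums[1]]
    mean_0 = [s / max(counts[0], 1) for s in sums[0]]
    return [a - b for a, b in zip(mean_1, mean_0)]

kernel = reverse_correlate(black_box, n_features=6, n_trials=5000)
print([round(k, 2) for k in kernel])
```

In this toy case the estimated kernel should follow the shape of the hidden weights, without the analysis ever reading them directly.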

Bio: Etienne Thoret is a CNRS researcher at the Institut de Neurosciences de la Timone in Marseille, France. With a dual background in physics and acoustics, he completed his Ph.D. in Acoustics and Signal Processing at Aix-Marseille University in 2014, where he explored the relationship between gestures and sound. Prior to joining CNRS in 2023, he held postdoctoral positions at McGill University (Montreal), École Normale Supérieure (Paris), and Aix-Marseille University, working across audio signal processing, neuroscience, and explainable AI. His research aims to bridge auditory neuroscience, computational modeling, and sound ecology. He develops interpretable machine learning approaches to analyze auditory perception, voice, and neural data, with a particular interest in uncovering how the brain encodes meaningful acoustic patterns. His studies span a variety of domains, from the cortical representation of voices and sound textures to the consequences of sleep deprivation on voice acoustic properties and the impact of natural soundscapes on brain and behaviour. By integrating signal processing techniques with behavioral and neurophysiological data, he contributes to a deeper understanding of auditory cognition. His interdisciplinary work sheds light on the complex dynamics linking acoustic structures, neural codes, and perceptual experience—helping to develop tools for both basic research and societal applications, such as vocal biomarkers or acoustic indicators of biodiversity.

Zoom link: https://cnrs.zoom.us/j/98877981395?pwd=QsQhIuMPBgUybaP2m51reocDkTFUxf.1

11:00am - Emmanuel Ponsot

Speaker: Dr Emmanuel Ponsot, Sciences et Technologies de la Musique et du Son, Paris

Title: Is Reverse-Correlation Appropriate for Uncovering (Para)linguistic Inferences from Speech Prosody?

Abstract: Reverse-correlation applied to prosody provides a principled method for uncovering the prototypical representations underlying high-level inferences from speech signals. This approach holds promise for studying cross-cultural differences in emotional and social judgments, as well as impairments in individuals with specific pathologies or neurological disorders. However, a critical assumption of this method is that the brain’s inference algorithm can be approximated by linear template-matching—where prosodic judgments arise from comparing speech signals to a single internal prototype via a dot product. This assumption, though foundational, has never been empirically tested. In this talk, I will present two novel approaches to evaluate whether (para)linguistic inferences from prosody are well captured by linear template-matching, using existing published datasets. First, we explore alternative visualization techniques for reverse-correlation data to better discriminate between competing computational models. Second, we categorize trials based on linguistic-theoretical criteria and assess how effectively template-matching explains trial-by-trial responses across different categories. These directions aim to refine our understanding of the computational mechanisms behind prosodic inference and assess the validity of reverse-correlation in this domain.
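The linear template-matching assumption discussed in this abstract can be written compactly. The notation below is an illustrative assumption, not the speaker's: $\mathbf{s}$ is the prosodic contour of a stimulus (for example, pitch values at successive time points), $\mathbf{k}$ is the listener's internal prototype, $\varepsilon$ is internal noise and $c$ is a decision criterion.

```latex
r =
\begin{cases}
1 & \text{if } \mathbf{k}^\top \mathbf{s} + \varepsilon > c, \\
0 & \text{otherwise.}
\end{cases}
```

Under this model the response depends on the stimulus only through the dot product $\mathbf{k}^\top \mathbf{s}$, which is the property the talk proposes to test.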

Bio: Emmanuel Ponsot is a CNRS Researcher at the STMS Lab (Ircam, Paris). Trained as an Engineer at École Centrale de Lyon, he earned a Master’s degree in Acoustics in 2012 before transitioning to Psychophysics and Cognitive Sciences. He completed his Ph.D. at Sorbonne Université in 2015 and conducted postdoctoral research at Ircam, École Normale Supérieure (Paris), and Ghent University (Belgium), where he was supported by a Fondation pour l’Audition fellowship. His research relies on a tight integration of psychophysics, EEG, and computational modeling to study how the human auditory system processes complex sounds, such as speech, at both peripheral and central levels. His current projects explore diverse topics, including the neural and perceptual coding of auditory spectral shape and the computational mechanisms underlying social and emotional cognition in speech prosody. A key goal of his research is translating these findings, along with the novel experimental and computational tools developed, into refined audiological tools for individuals with hearing impairments or neurological conditions.

Zoom link: https://cnrs.zoom.us/j/98877981395?pwd=QsQhIuMPBgUybaP2m51reocDkTFUxf.1

11:30am - Ladislas Nalborczyk

Speaker: Dr Ladislas Nalborczyk, Laboratoire Parole et Langage, Aix-en-Provence

Title: Using reverse correlation to uncover the mental representation of one’s own voice

Abstract: Most people experience a discrepancy between the sound of their recorded voice (digital self-voice) and the voice they hear while speaking (natural self-voice). This mismatch is often attributed to the fact that natural self-voice perception combines both air and bone conduction, while recorded voices are transmitted through air conduction alone. Although prior studies have attempted to improve self-voice identification through acoustic modifications, they have not identified a generalisable solution and have largely overlooked inter-individual variability. In this talk, I will present findings from a study that used reverse correlation to reveal the perceptual filters individuals rely on to identify their own voice. We recorded 93 participants uttering the syllable [a] and generated hundreds of modified versions of each recording using time-varying filters applied to speech-relevant frequency bands. Participants were then presented with 400 pairs of these modified voices and asked to select the one that best matched how they perceive their voice while speaking. This procedure enabled us to reconstruct each participant’s mental representation of their self-voice. Despite notable inter-individual variability, our results point to a shared filter, characterised by increased amplitude around speech formants (F1–F4), that supports natural self-voice recognition. These findings shed light on the perceptual mismatch between bone- and air-conducted self-voice and may guide the development of more effective voice modification tools, particularly for individuals who depend on text-to-speech communication systems.
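The pairwise procedure described in this abstract can be sketched with a simulated listener. This is a simplified illustration under stated assumptions, not the study's pipeline: filters are reduced to one static gain per frequency band (the study used time-varying filters), `hidden_template` and `internal_noise_sd` are hypothetical, and the kernel is taken as the average difference between chosen and rejected gain profiles over 400 trials.

```python
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def simulate_kernel(template, n_trials=400, internal_noise_sd=1.0, seed=1):
    """Simulate a two-alternative task and recover the listener's filter."""
    rng = random.Random(seed)
    n_bands = len(template)
    kernel = [0.0] * n_bands
    for _ in range(n_trials):
        # Two random gain profiles, one gain per frequency band.
        pair = [[rng.gauss(0.0, 1.0) for _ in range(n_bands)] for _ in range(2)]
        # Simulated listener: scores each version against a hidden template,
        # with internal noise added to each evaluation, and picks the best.
        scores = [dot(p, template) + rng.gauss(0.0, internal_noise_sd) for p in pair]
        chosen, rejected = (0, 1) if scores[0] >= scores[1] else (1, 0)
        # Accumulate the mean (chosen - rejected) gain difference per band.
        for i in range(n_bands):
            kernel[i] += (pair[chosen][i] - pair[rejected][i]) / n_trials
    return kernel

hidden_template = [0.0, 1.0, 2.0, 1.0, 0.0]  # hypothetical per-band preference
kernel = simulate_kernel(hidden_template)
print([round(k, 2) for k in kernel])
```

Averaging such kernels across participants is one simple way to look for a shared filter, although the analysis used in the study may differ.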

Bio: Ladislas Nalborczyk is a CNRS researcher at the Laboratoire Parole et Langage (LPL). His research combines experimental (e.g., psychophysics, EMG, M/EEG, TMS) and computational (e.g., mathematical modelling, machine learning) methods to better understand the conscious experience, the cognitive mechanisms, and the neural underpinnings of inner speech (the mental production of speech), mental/motor imagery, and synesthesia. He completed a joint PhD in cognitive neuroscience and experimental psychology at Grenoble-Alpes University and Ghent University (Belgium) in 2019, on the topic of inner speech and rumination, under the supervision of Hélène Loevenbruck, Marcela Perrone-Bertolotti, and Ernst Koster. He then worked for 1 year as a postdoc in the team of Thomas Hueber and Laurent Girin at GIPSA-lab (Grenoble) on improving deep learning methods to decode overt and covert speech from intracranial (ECoG) data. In 2021, he obtained a 2-year ILCB postdoc grant to investigate the inhibitory mechanisms underlying inner speech and typing imagery, under the supervision of Xavier Alario and Marieke Longcamp, now at the CRPN. Before joining the LPL, he worked for 1.5 years at UNICOG/NeuroSpin (CEA) and the Paris Brain Institute (AP-HP, INSERM) under the supervision of Stanislas Dehaene and Laurent Cohen, on the neural dynamics underlying silent reading and tickertape synesthesia.

Zoom link: https://cnrs.zoom.us/j/98877981395?pwd=QsQhIuMPBgUybaP2m51reocDkTFUxf.1

9th July afternoon - PhD Defense

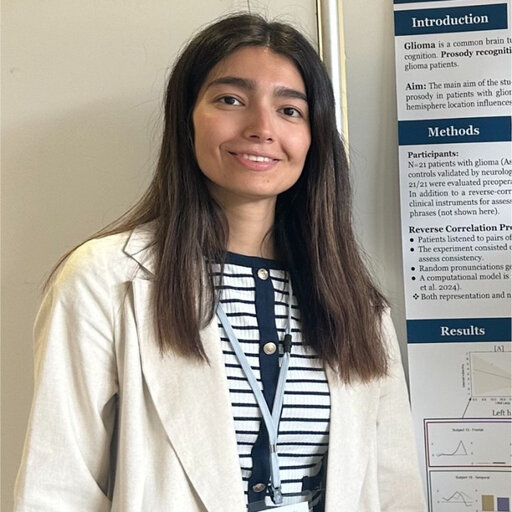

2:00pm - Aynaz Adl Zarrabi

PhD candidate: Aynaz Adl Zarrabi, FEMTO-ST Institute, Besançon

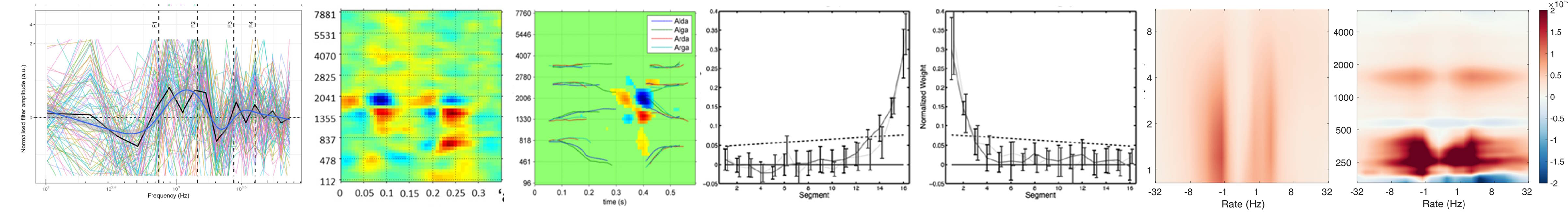

Title: Reverse-correlation modeling of deficits of prosody perception in right-hemisphere stroke

Abstract: This thesis aims to improve the diagnosis and understanding of prosody perception deficits in right-hemisphere stroke patients using the psychophysical technique of reverse correlation. While standard assessment tools lack the sensitivity and precision to detect such impairments, reverse correlation offers a means to quantify patients’ internal sensory representations and internal noise, providing insight into the cognitive and perceptual mechanisms disrupted by stroke. In Part I, we applied reverse correlation paradigms and classical analysis methods to behavioral data from stroke patients and healthy controls. This initial work revealed distinct pathological profiles and differences in perceptual kernels and internal noise. However, two critical limitations emerged: the low number of available trials and the presence of perseveration, repetitive responding that distorts response-stimulus mappings. These issues compromised the reliability of standard reverse-correlation techniques and motivated the development of more robust approaches. In Part II, we introduced several methodological contributions. Three novel techniques were developed to estimate internal noise without requiring double-pass trials, including a method based on GLM-derived confidence intervals. These methods were more accurate than classical approaches, particularly in low-trial scenarios. We also proposed a GLM-HMM to jointly estimate perceptual kernels and identify latent internal states, such as engagement and perseveration, based on both stimulus integration and response patterns. In Part III, we applied these refined tools to clinical data. The GLM-HMM revealed that much of the internal noise previously measured using classical techniques was inflated by perseverative behavior. Importantly, noise estimates derived from the new methods correlated with distinct clinical assessments, indicating improved construct validity.
Transition probabilities between latent states emerged as new potential biomarkers: stroke patients were not more likely to enter perseverative states than controls but exhibited reduced ability to recover from them. Moreover, perseveration in patients was associated with slower response times and, before them, longer sequences of identical responses, suggesting a different cognitive mechanism than in controls. Notably, switches back to engagement tended to coincide with trials of lower difficulty, that is, greater alignment between the stimulus and the patient’s internal kernel.

Committee

- Mr Jean-Julien AUCOUTURIER, DR CNRS, FEMTO-ST, Besançon: thesis supervisor

- Ms Anahita BASIRAT, PU, SCALAB, Univ. Lille: reviewer

- Ms Charlotte JACQUEMOT, DR CNRS, IRMB/ENS, Paris: examiner

- Mr Ladislas NALBORCZYK, CR CNRS, LPL, Aix-en-Provence: examiner

- Mr Emmanuel PONSOT, CR CNRS, STMS, Paris: invited member

- Mr Léo VARNET, CR HDR, CNRS, LSP/ENS, Paris: reviewer

- Ms Marie VILLAIN, MCU HDR, Sorbonne Univ., Paris: co-supervisor

Zoom link: https://cnrs.zoom.us/j/98877981395?pwd=QsQhIuMPBgUybaP2m51reocDkTFUxf.1

PhD manuscript: Adl Zarrabi, A. (2025). Reverse-correlation modeling of deficits of prosody perception in right-hemisphere stroke. PhD manuscript, Université Marie et Louis Pasteur, Besançon, France.

Acknowledgements

The workshop is organized thanks to the continued support of Fondation Pour l’Audition: Prix d’Emergence Scientifique, 2018 (to JJA), Bourse postdoctorale, 2020 (to EP) and Appel à projet laboratoire, 2021 (to JJA and MV).

Fondation Pour l’Audition was created by Françoise Bettencourt Meyers, Jean-Pierre Meyers and the Bettencourt Schueller Foundation. It has been recognized as a public-interest organization since 2015. Its mission is three-fold: supporting research and innovation to offer new hope; informing the public to protect hearing health through prevention and awareness initiatives; and improving the lives of people with hearing disorders.

With more than 750 researchers, the FEMTO-ST Institute (CNRS/Université Marie et Louis Pasteur) was created about 20 years ago and has grown to become the country’s largest public CNRS engineering lab, with expertise covering all fields of system science. We’re located in Besançon, a UNESCO World Heritage site close to the French-Swiss Jura mountains, within easy train access from Paris and most major European travel hubs. Besançon is a vibrant, mid-size regional capital that regularly ranks among the best cities in France for quality of life and green space per inhabitant. It is also home to a newly federated university (Université Marie et Louis Pasteur) with more than 50,000 students.